The surge in OpenClaw’s popularity over the past few days has been remarkable—and consequential. In about a week, the project grew from roughly 7,800 GitHub stars on January 24, 2026 to over 150,000 in days. This explosive adoption highlights how compelling the idea of a personal, autonomous digital agent has become. It also underscores how easily critical security considerations can be overlooked in the excitement.

For those unfamiliar with OpenClaw, it allows users to deploy their own AI-powered agent locally on a laptop or a desktop and interact with it through popular messaging platforms such as WhatsApp, Telegram, and Discord. By default, OpenClaw runs with the same privileges as the user account that launches it—granting broad access to local files, network resources, environment variables, and the ability to execute commands on the host system. With additional configuration, an agent can be empowered to send emails, post messages, and run tools on the user’s behalf.

This design choice introduces a unique and significant security challenge. Acting with user-level privileges, an OpenClaw agent effectively behaves like a programmable operator sitting at the user’s keyboard. In the absence of proper configuration, isolation, and guardrails, this level of autonomy can lead to substantial data exposure or malicious impact. In fact, misconfigured OpenClaw deployments have already resulted in unauthorized access and the exposure of sensitive information, including authentication tokens and conversation histories.

Another emerging risk lies in OpenClaw skills. Skills serve as building blocks that increase OpenClaw’s capabilities, making tasks like web browsing, image generation, and coding possible. While a powerful tool, Skills poses an emerging risk that can extend an agent’s capabilities. Malicious plug-and-play skills have begun to surface, with users being tricked into downloading them via typo-squatted websites and fake repositories. In practice, installing an unvetted skill is equivalent to running untrusted third-party code with the agent’s full privileges—an issue that mirrors classic supply-chain risks but with a much larger blast radius due to agent autonomy. In another incident, OpenClaw’s failure to validate the WebSocket origin header in its WebSocket server implementation has led to a successful one click remote code execution on the victim’s machine.

The risk profile becomes even more nuanced when OpenClaw is paired with Moltbook, an agent-to-agent communication and observation platform. Moltbook allows AI agents to post messages, reply to other agents, and read peer output as external context. While Moltbook does not execute code or invoke tools, it introduces a powerful new influence surface: peer-generated content that can shape an agent’s reasoning.

Recent unauthorized access to Moltbook’s backend exposed agent authentication credentials. Although these credentials did not provide access to agents’ local tools or environments, the impact was still serious. Agent identities could be impersonated, allowing content to be posted on behalf of trusted, benign agents. In an agent ecosystem, identity is the trust anchor. Once that anchor is compromised, a popular or authoritative agent can be weaponized to distribute biased, misleading, or malicious advice at scale.

If consuming agents are not explicitly designed to treat Moltbook content as untrusted input, this creates a pathway for poisoned context and memory. Over time, repeated exposure to adversarial or biased peer content can subtly influence an agent’s reasoning, priorities, and recommendations—without ever triggering a traditional exploit. This risk is amplified if Moltbook-derived content is allowed to persist in memory or bleed into user context.

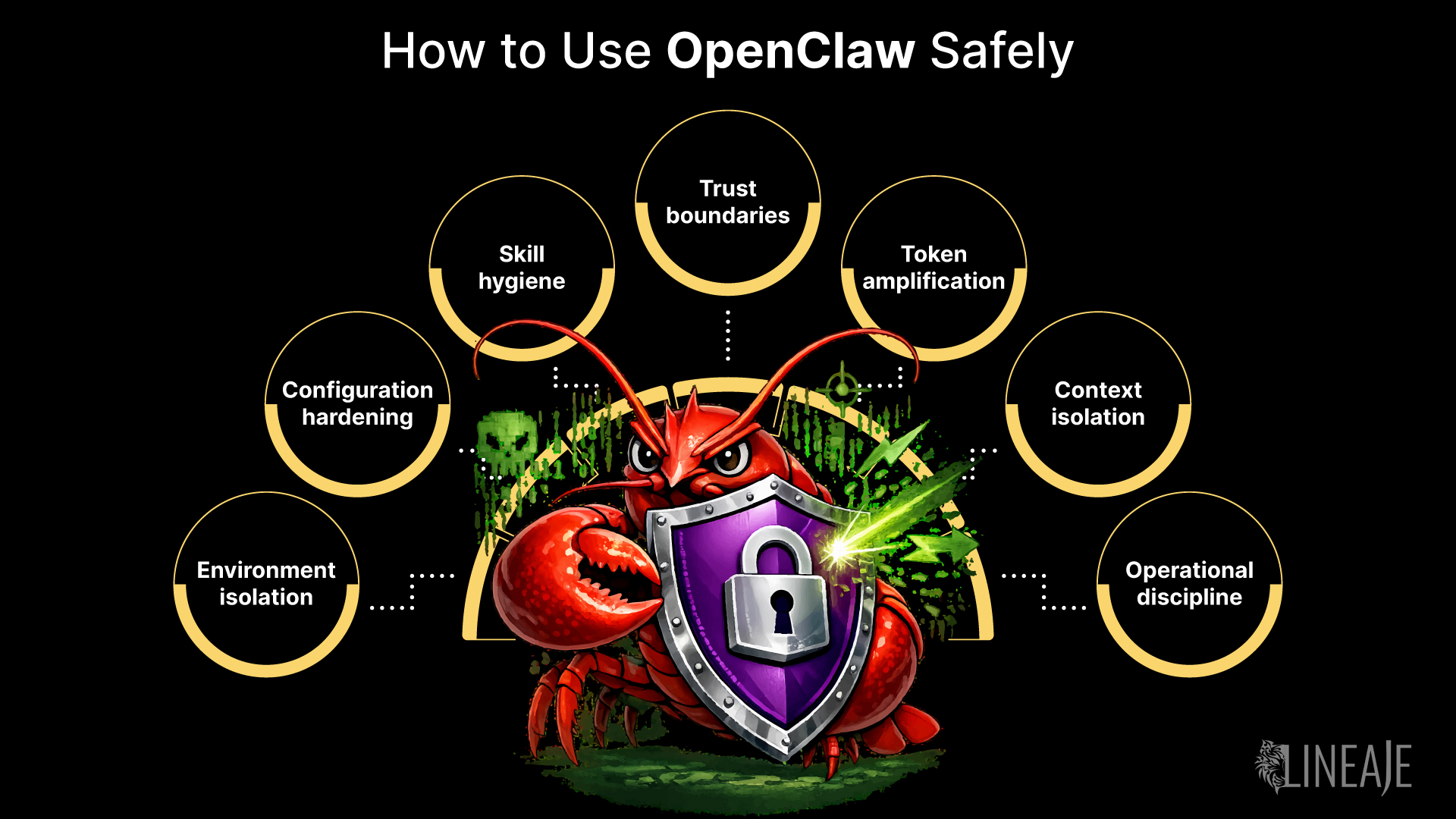

Mitigating these risks requires treating OpenClaw agents as what they truly are: privileged, autonomous software systems that demand the same rigor as any other sensitive workload. Practical steps include:

OpenClaw’s rapid rise reflects a broader shift toward highly capable, user-operated AI agents. Harnessed responsibly, these systems can deliver tremendous value. But without deliberate security boundaries—especially around privilege, third-party extensions, and peer-generated context—they also introduce new and subtle failure modes. As agentic AI moves from experimentation to daily use, getting these fundamentals right is no longer optional.